The report “Pasado, presente y futuro de la monitorización” explores the most relevant aspects of the history of IT monitoring and describes the concepts that have most influenced its evolution over time.

If you wish, you can download the full report for free.

The following sections summarize the main ideas of the report, published in the second edition of the itSMF Spain magazine.

The beginnings of IT monitoring

In its early days, IT monitoring was a way to check the availability of servers and the connectivity of devices. It soon extended to other areas, such as hardware and operating systems.

This type of monitoring, also known as device-centric, offers an individualized analysis of the status of devices. It compares the data obtained with pre-established parameters. If the values are not within these parameters, the tool generates an alert.
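
The comparison described above can be illustrated with a minimal Python sketch. It is not taken from the report: the `Threshold` class, the `check_device` function, the metric names and the limits are all hypothetical, chosen only to show how a reading is compared with a pre-established range and turned into an alert.

```python
from dataclasses import dataclass


@dataclass
class Threshold:
    """Acceptable range for one metric (hypothetical names and limits)."""
    metric: str
    minimum: float
    maximum: float


def check_device(device: str, readings: dict[str, float],
                 thresholds: list[Threshold]) -> list[str]:
    """Return one alert message per reading that is missing or out of range."""
    alerts = []
    for t in thresholds:
        value = readings.get(t.metric)
        if value is None:
            # A missing reading is reported too: the device may be unreachable.
            alerts.append(f"{device}: no data for {t.metric}")
        elif not (t.minimum <= value <= t.maximum):
            alerts.append(
                f"{device}: {t.metric}={value} outside [{t.minimum}, {t.maximum}]"
            )
    return alerts


# Illustrative thresholds and readings for a single, made-up server.
thresholds = [
    Threshold("cpu_percent", 0.0, 90.0),
    Threshold("disk_free_gb", 10.0, float("inf")),
]
alerts = check_device("srv-01", {"cpu_percent": 97.5, "disk_free_gb": 42.0}, thresholds)
print(alerts)
```

Each device is evaluated in isolation here, which is exactly why this approach provides no context about how the device relates to the rest of the infrastructure.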

However, device-centric monitoring has several drawbacks. Among them are the lack of context and of a global view, and the generation of large volumes of data that are not always useful. Still, many companies continue to use this type of monitoring today.

Later on, there was a shift toward specialization in monitoring, with different departments or teams managing different specific tools. These tools provide more details on concrete aspects, such as infrastructure or applications.

In parallel, tools that concentrate all the relevant information and generate “complex alerts” began to appear. With them, the term telemetry gained prominence. Telemetry encompasses the concepts of events, metrics, logs and traces, and it is defined as “the entire set of relevant data for monitoring, regardless of the tool that facilitates them and their format.”

But this type of monitoring is not without its drawbacks either. For starters, specialization and decentralized management do not facilitate problem solving. Besides, although specialized tools generate a significant amount of information, that information is not necessarily easier to understand and interpret, and its volume can lead to storage issues. Furthermore, this specialized approach still lacks a global view of everything being monitored.

As mentioned in the report, “the objective should not be to monitor many parameters, but to identify those that are adequate, meaningful and relevant, within a model that provides different levels of analysis.”

ITSM Monitoring: Based on services and managed by process

ITSM process and service monitoring offers an aggregate view not provided by the previous types. In line with the key aspects of ITIL and ISO 20000, this type of monitoring puts the focus on services. Specifically, it verifies overall compliance with the service levels established in the SLA, regardless of the actual status of its individual components.
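
As a rough illustration of service-level verification, the following Python sketch computes the availability of a service over a period and compares it with an SLA target. The function names, the 99.9% target and the outage figures are assumptions made for this example, not values from the report. Only outages of the service as a whole are counted, so a failed component that is covered by redundancy would not appear in the input.

```python
def service_availability(minutes_in_period: int, outage_minutes: list[int]) -> float:
    """Percentage of the period in which the service as a whole was usable."""
    downtime = sum(outage_minutes)
    return 100.0 * (minutes_in_period - downtime) / minutes_in_period


def meets_sla(availability: float, target: float) -> bool:
    """True if the measured availability reaches the agreed target."""
    return availability >= target


# Hypothetical 30-day month with two service-level outages of 20 and 15 minutes.
period = 30 * 24 * 60
availability = service_availability(period, [20, 15])
print(f"{availability:.3f}% -> SLA met: {meets_sla(availability, 99.9)}")
```

With these made-up figures the service stays above a 99.9% target even though it suffered two outages, which is the kind of aggregate answer a business user can interpret directly.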

The level of aggregation inherent in this type of monitoring facilitates interpretation by business users. It also allows for a multidimensional analysis through the monitoring of the service levels of each process. In addition, it favors the identification of “cause-effect relationships”, which makes monitoring more predictive.

The report highlights capacity management and availability management as the most common ITSM processes in IT monitoring, explaining their main characteristics and the differences between them.

Although this type of monitoring is far more mature than the previous ones, its main disadvantage is that neither ITIL nor ISO 20000 gives precise instructions on how to implement it. Therefore, the organization is responsible for identifying key performance indicators (KPIs) and for defining and implementing a customized model.

In response to this problem, BSM (Business Service Management) tools were born. These tools sought to satisfy the needs of the business, and they had a clear orientation to services and to management by processes. They also provided a systematic approach to ITSM monitoring.

However, the life of BSM tools was quite short. Due to the difficulties associated with their implementation and the technological deficiencies at the time, their level of adoption was quite low. Finally, a report published by Gartner in 2016 declared them obsolete.

Current trends in IT monitoring

The report mentions several industry trends currently affecting IT monitoring. Among them:

- Increasing migration of infrastructure, platforms and services to cloud environments.

- On-demand resource provision.

- Container orchestration environments.

- Software architectures based on microservices.

In reaction to these trends, several specific cloud-native monitoring tools have emerged.

Another trend that affects IT monitoring is the application of Agile, both to software development and to other areas. The report raises the question “Can product orientation and service orientation coexist?”

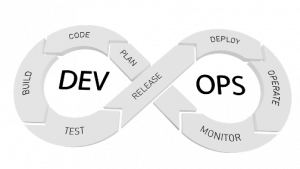

There is also the DevOps movement, closely related to Agile. DevOps introduces a life cycle that encompasses development and operations, providing a framework for the implementation of practices that promote collaboration between these two areas.

Agile and DevOps provide flexibility and horizontality to processes, resulting in reduced times and greater value for the business. As for monitoring, these trends augur a “shift-left” in which developers will play a key role in identifying aspects to be monitored and guaranteeing that applications are traceable.

Later, the report compares DevOps with Google’s Site Reliability Engineering (SRE), explaining the main aspects of the latter and highlighting its focus on reliability.

The concept of observability is also mentioned. It is defined as “the ability of an application or a system to offer useful information to the outside about its internal state”. According to the report, observability is the basis of monitoring and analysis activities.

Lastly, the report also talks about user experience monitoring. This type of monitoring incorporates technology that allows for the observation of users’ experience as they interact with the service or resource.

AIOps: The future of IT monitoring

Beyond the influence of the trends already described, what does the future of monitoring hold for us? According to the report, the emergence of AIOps, currently in the early stages of its development, is the latest step in the evolution of monitoring to date.

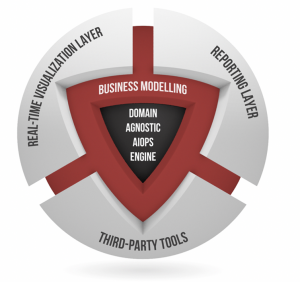

AIOps refers to monitoring tools that incorporate artificial intelligence into operations, allowing for the systematization and automation of tasks. Considered an evolution of BSM, AIOps leaves a large part of the analytical process to artificial intelligence.

In addition to providing end-to-end visibility, displaying indicators in real time, and performing predictive analysis to detect anomalies, AIOps tools include prescriptive functionalities, such as recommendations and self-repair.
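
To give a sense of what automated anomaly detection involves, here is a deliberately simple Python sketch based on a z-score, a basic statistical technique. It is a stand-in for illustration only and not the method used by any particular AIOps tool; the `find_anomalies` function, the threshold of 2.0 and the latency values are all assumptions.

```python
from statistics import mean, stdev


def find_anomalies(values: list[float], threshold: float = 2.0) -> list[int]:
    """Return indices of values more than `threshold` standard deviations
    away from the mean. Requires at least two values."""
    mu = mean(values)
    sigma = stdev(values)
    if sigma == 0:
        # All values are identical, so nothing stands out.
        return []
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]


# Made-up response times in milliseconds, with one obvious spike.
latencies_ms = [120, 118, 125, 122, 119, 121, 480, 123]
print(find_anomalies(latencies_ms))  # prints [6]
```

Real tools would work on far larger data sets and combine several sources of telemetry, but the principle of flagging values that deviate from learned behavior is the same.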

These tools can be classified into two groups. If they only process the data they generate themselves, they are domain-centric. Conversely, those that can be integrated with other sources of information are called domain-agnostic.

Obsidian’s innovative intelligent monitoring platform is among the latter, and it includes a business modelling layer, as well as dashboards for displaying indicators.

Further information

If you would like to learn more about the Obsidian intelligent monitoring platform and its functionalities, please contact us via the attached form.

You can also subscribe to our newsletter or to our YouTube channel, which currently has 1580 subscribers. To find out more about Obsidian, you may also be interested in reading our blog and the news section of our website. Lastly, you can follow us on LinkedIn, Facebook and Twitter.

The personal data collected via the form will be used to process your request. You may exercise your rights of access, rectification and cancellation by writing to Obsidian Soft, C/ Méndez Álvaro, 20 · 18045 · Madrid.